Can Artificial Intelligence Make the ‘Right’ Decisions on Roads?

Self-driving cars have been put forth as a solution that can make mobility safe, secure, affordable, sustainable and accessible for everyone. But can autonomous driving systems be relied on to make life-or-death decisions in real time on roads? And is the world ready to be driven around in these vehicles?

These burning questions were tackled in a panel discussion during the AI for Good Global Summit 2020.

Technology Driving the Focus

The automotive industry has long touted self-driving features to customers for safety and comfort on the road.

Tesla recently said a beta version of its ‘full self-driving’ software would be released to a few “expert and careful drivers.” Five years after its prototype debuted on a public road, Alphabet’s Waymo has opened its “fully driverless service” taxi to the public in Phoenix, United States.

Road safety is governed internationally by the 1949 and 1968 Conventions on Road Traffic.

While technology holds promise in averting crashes caused by human error, there are concerns about whether self-driving cars are built to adapt to evolving traffic conditions.

“A lot of the focus now is on technology, and there’s not enough on the user and their traffic environments,” said Luciana Iorio, chair of the UNECE Global Forum for Road Traffic Safety (Working Party 1), the custodian of the road safety conventions.

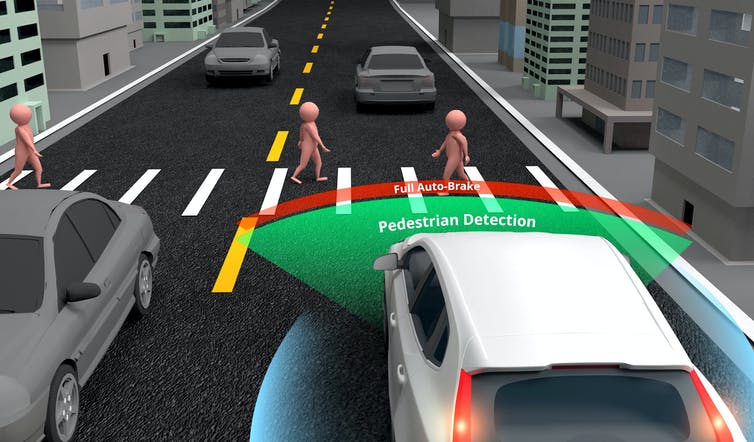

A technology-driven approach overlooks the fact that pedestrians, cyclists and motorcyclists account for more than half of all road traffic deaths. Well-designed cities and infrastructure are needed to protect these vulnerable road users.

“Self-driving technology should not create a digital divide and must be a transformational opportunity for everyone around the world,” Iorio added.

Danger also arises when car manufacturers oversell their technology as being more autonomous than it actually is. Liza Dixon, a PhD candidate in Human-Machine Interaction in Automated Driving, coined the term “autonowashing” to describe this phenomenon.

SAE International defines vehicles as having six automation levels depending upon the amount of attention required from a human driver. In levels zero through two, humans drive and monitor the traffic environment; in levels three through five, the automated systems drive and monitor the environment.

Reality Versus Hype

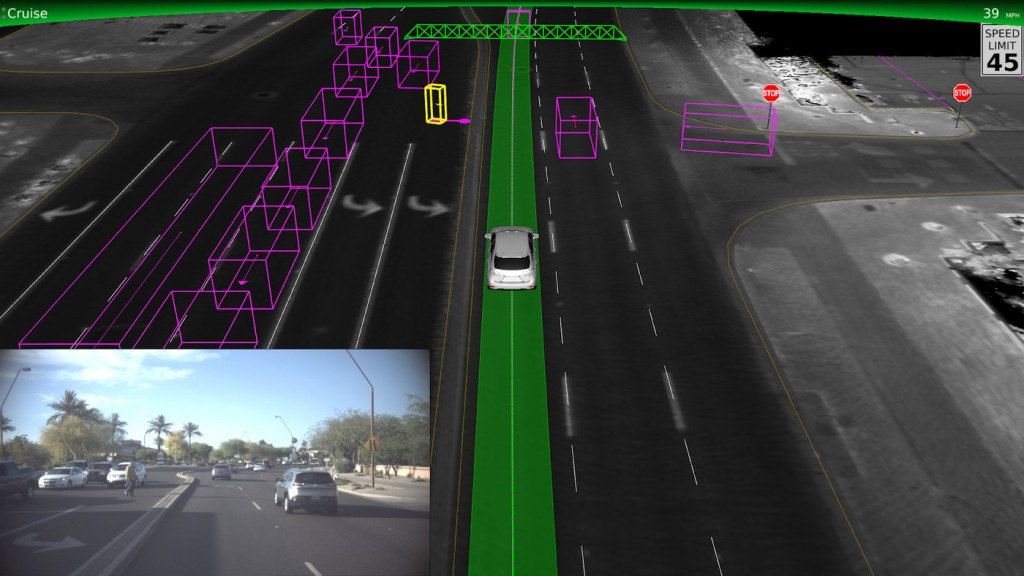

The advanced driver assistance systems (ADAS) in cars today exhibit SAE Level 2 partial automation. Many consider SAE Level 5, where the automated driving system can operate under all traffic and weather conditions, to be out of reach. For now, the industry is focused on SAE Level 4, which operates under restricted driving conditions such as small geographical areas and good weather.

Consumers may be unaware of these distinctions. Videos showing people sleeping or watching movies in their cars are creating a dangerous, misguided impression about the technology, Dixon said.

“Every consumer vehicle on the road today needs the driver to be prepared to take over control at all times. The driver must know this is a supportive, cooperative system that augments their ability and not something that takes over their role of driving,” Dixon said.

Language and perception matter too. A study by the American Automobile Association (AAA) Foundation introduced the same driving assistance system to two groups of participants, albeit under different names. One group was told about the capabilities of the “AutonoDrive” system and the other about the limitations of the “DriveAssist” system. The former group was more likely to believe, incorrectly, in the system’s ability to detect and respond to hazards, the study found.

Open Frameworks for Public Safety

There are efforts afoot to fix the lack of industry-wide standards for safe self-driving.

The World Economic Forum’s Safe Drive initiative wants to create new governance structures that will then inform industry safety practices and policies for self-driving cars. Its proposed framework is centred around a scenario-based safety assurance approach.

“This is predicated on the assumption that the way autonomous vehicles are managed today was piloted through exemptions and non-binding legal codes, which are not sufficient in the long term,” said Tim Dawkins, Lead of Automotive and Autonomous Mobility at WEF.

The safety of autonomous vehicles can only be determined in the context of their environment, he added.

The ITU focus group on AI for autonomous and assisted driving (FG-AI4AD) is another effort to establish international standards that will monitor and assess the performance of the ‘AI Driver’ steering automated vehicles. It has proposed an international driving permit test for the AI Driver, which requires a demonstration of satisfactory behaviour on the road.

The UNECE’s Working Party 29, which looks at harmonization of global vehicle regulations, has said: “Automated vehicle systems, under their operational domain (OD), shall not cause any traffic accidents resulting in injury or death that are reasonably foreseeable and preventable.”

According to Bryn Balcombe, chair of FG-AI4AD and founder of the Autonomous Drivers Alliance (ADA), terms like “reasonably foreseeable” and “preventable” still need to be defined and agreed upon while ensuring they match public expectations.

The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems has also worked on broad and specific safety standards across industries, ranging from healthcare and agriculture to autonomous driving.

“We have taken an umbrella approach to creating ground rules to engender trust. We apply different considerations to different case studies and stress-test them,” said Danit Gal, a member of its Executive Committee and a Technology Adviser in the Office of the UN Under-Secretary-General.

Frameworks need to consider the continuously changing nature of autonomous machines, she said, also noting the challenge of aligning international standards to national regulations.

Gal proposed an information-sharing hub for different AI-enabled mobility initiatives to exchange their findings. Balcombe brought up the ITU Global Initiative on AI and Data Commons, promoting sharing of open datasets and results.

“This is to make sure that technology can be developed to deploy broadly and widely and not create and exacerbate some of the divides that we currently have,” he said.

The ‘Molly Problem’ of Ethical Decisions

Public trust and intuitions about AI and its ability to explain decisions made on the road are crucial for the future of AI-enabled safe mobility, according to Matthias Uhl and Sebastian Krügel from the Technical University of Munich.

Together with the ADA, Uhl and Krügel set up ‘The Molly Problem’ survey to support the ITU focus group’s requirements-gathering phase.

An alternate take on the ‘trolley problem’ thought experiment, ‘The Molly Problem’ tackles the ethical challenges to consider when autonomous vehicle systems cannot avoid an accident. Its premise is simple: a young girl, Molly, crosses the road and is hit by an unoccupied self-driving vehicle. There are no eyewitnesses. A series of questions then captures what the public expects should happen next; together, these questions define ‘The Molly Problem’.

A survey circulated ahead of the panel discussion sought feedback from members of the public and had received 300 responses at the time of the event.

Uhl and Krügel found that most respondents wanted the AI system to store and recall information about Molly’s crash. Beyond the time and location of the crash, they also wanted to know the vehicle’s speed at the time of the collision, when the collision risk was identified and what action was taken. Respondents also believed the software should explain if and when the system detected Molly and whether she was detected as a human.

“About 73 per cent of the respondents said that even though they are very excited about the future of autonomous vehicles, they think vehicles should not be allowed to be on the road if they cannot recall this information. Only 12 per cent said these could be on the road,” said Krügel.

An even bigger challenge for the industry to address is that 88 per cent of respondents believed similar data about near-miss events should be stored and recalled.

The results from this survey will help identify requirements for data and metrics in shaping global regulatory frameworks and safety standards that meet public expectations about self-driving software.

A Step Towards Explainable AI

Existing event data recorders focus on capturing collision information, said Balcombe. At present, there is no common approach or system capable of detecting a near-miss event, he added. ‘Black box’ recording devices for autonomous cars only indicate if a human or a system was in control of the vehicle or whether a request to transfer control was made.

“It’s about explainability. If there has been a fatality, whether in a collision or a surgery, the explanations after the event help you build trust and work towards a better future,” Balcombe said.

Krügel believes that these concerns about self-driving need the input of social scientists who can work with engineers to make algorithms ethically safe for society.

Gal acknowledges that different ethical constraints and approaches pose challenging questions to promising solutions. “What happens if we find a system that caters more to a particular audience and someone else is not happy with a decision made during a collision? To what extent are they actually able to challenge it and to what extent can the machine reflect on these ethical decisions and act in a split second?” she asked.

A UN High-Level Panel on Digital Cooperation, which advances global multi-stakeholder dialogue on the use of digital technologies for human well-being, put forth this recommendation in 2019:

“We believe that autonomous intelligent systems should be designed in ways that enable their decisions to be explained and humans to be accountable for their use.”

The discussions from this event will inform the UN panel’s ongoing consultation process on artificial intelligence.
